Media Room

Happening Now

NCLA strives to be the go-to resource for information and impact litigation on the Administrative State

Featured News

NCLA In The News

'At the nadir of his constitutional powers': Public interest law firm urges appeals court to reject Trump's argument he has 'unlimited tariff authority'

Appeals Court Briefs Say Trump's Tariffs Are Based on a Statute That Does Not Authorize Tariffs at All

SEC Rethinks Market Surveillance Tool Some Investors Want to Axe

NCLA Podcast Administrative Static

Case Videos

NCLA Case Video

Simplified, et al. v. President Donald J. Trump, et al.

NCLA Case Video

Raymond J. Lucia, et al. v. Securities and Exchange Commission

NCLA Case Video

Dressen, et al. v. Flaherty, et al.

Take Action

Team Reality

The COVID-19 pandemic has proven to be a threat not only to the health and safety of Americans, but also to our way of life. Under the aegis of public safety, federal, state and local governments have violated constitutional law by implementing regulations and emergency orders by executive decree.

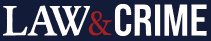

College Kangaroo Courts

If you study or work at a government-funded college and you are on the receiving end of a Title IX complaint, don’t expect justice to be served.

The Process Is the Punishment

SEC Hires Administrative Law Judges to Hear Its Case Against You. NCLA is fighting for Michelle Cochran to have her case heard before a federal judge, not an SEC-appointed bureaucrat.

ATF Can't Ban Your Bump Stocks

In 2019, the Bureau of Alcohol, Tobacco, Firearms and Explosives rewrote the statute to outlaw bump stocks. This change in the existing law turned hundreds of thousands of law-abiding citizens into criminals overnight.

End the Gag Rule

For decades the U.S. Securities and Exchange Commission (SEC) has been silencing defendants with its Gag Rule. But this federal agency is not alone.

King George III Prize

In keeping with the spirit of the madness of March and King George, we started with a bracket of 32 nominees comprising the state and federal agencies and the bureaucrats who committed the worst abuses of civil liberties in 2022.

TV & Radio Interviews

First Major Lawsuit Filed to Stop Trump's Tariffs

Markets Fluctuate Amid Tariff Confusion

Florida Stationery Company Files First Lawsuit Challenging Trump's China Tariffs

SCOTUS Guts the Administrative State | Chevron is Overturned

Do Federal Agencies Have Too Much Power?

Gun Owners FIRE BACK At Bump Stock BAN, SCOTUS To Decide: Rising Reacts

NCLA's Margot Cleveland on Stinchfield Tonight

Biden Administration Pressured Social Media to Silence Critique of Its COVID-19 Responses

The Federalist's Mollie Hemingway Talks About NCLA's 1st Amendment Lawsuit Against State Department

Supreme Court Takes Up Potential Landmark Case From New Jersey Fisherman

Legal Battle to Limit Federal Regulatory Power

Fishermen Case Could Impact Key Fed Regulations

Supreme Court Weighs Costly Fishing Mandate

Longest Serving Federal Cir. Court Judge Suspended Due to Age Concerns

Biden Loses Covid Case

US Gov't Repeatedly Violated 1st Amd by Censoring Social Media, w/ Plaintiffs' Lawyer Jenin Younes

Sheng Li on New Covid-19 Mandates

The Student Loan Standoff

Government Blocking Speech Based on Views: Counsel

President Tries to Salvage Student Debt Cancellation

Groups Sue Biden Over Student Loan Handout

Biden Moves to Plan B on Student Loan Bailout

Mark Chenoweth on Biden's Unlawful Student Loan Debt Cancellation

Lawsuit Targets MA Covid-Tracking App

Mass. Health Officials, Google Allegedly Put Tracking Software on Phones During Pandemic

Supreme Court Strikes Down Student Loan Cancellation Plan

NCLA Defends Constitutionally-Protected Speech

Supreme Court Strikes Down Biden Student Loan Bailout

The Dark Truth About Government Censorship

Real America's Voice: Changizi v. HHS

Social Media Censorship In Focus

COVID Vaccine-Injured Sue Biden Administration over Censorship

The Importance of Impartiality in Regulating Professional Practice (in Spanish)

IRS Actions ‘Disturbing’: Rights Group

Jenin Younes on California's Law That Censors Physicians

Mass. & Google Installed Covid Spyware on People's Phones Without Permission?

California Judge Temporarily Blocks Covid Misinformation Law

Court Strikes Down Trump-Era Bump Stock Ban

Jenin Younes: Big Tech Colluding With Government on Pandemic Censorship

Doctor Who Resisted Covid Narrative Shadowbanned

Holding Government Officials Accountable

Lawsuit Alleges MA DPH Secretly Tracked People During Covid

Twitter's Midterm Misinformation Policy Needs to Go

Government Officials Deposed in Social Media Censorship Lawsuit

Biden's Student Loan Forgiveness Plan Undermines Non-Profits

EXPOSING Media Censorship: This is a VIOLATION of the First Amendment | Jenin Younes | Centerpoint

White House Emails To Be Turned Over in Censorship Lawsuit